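All of our labeling machines are manufactured in our own factory. With no middlemen adding a markup, we can offer more competitive prices, along with more specialized technical support and after-sales service.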

Our labeling machines are built from high-quality materials using advanced manufacturing processes. They are produced and tested in strict accordance with international quality standards to ensure stable, reliable quality and performance.

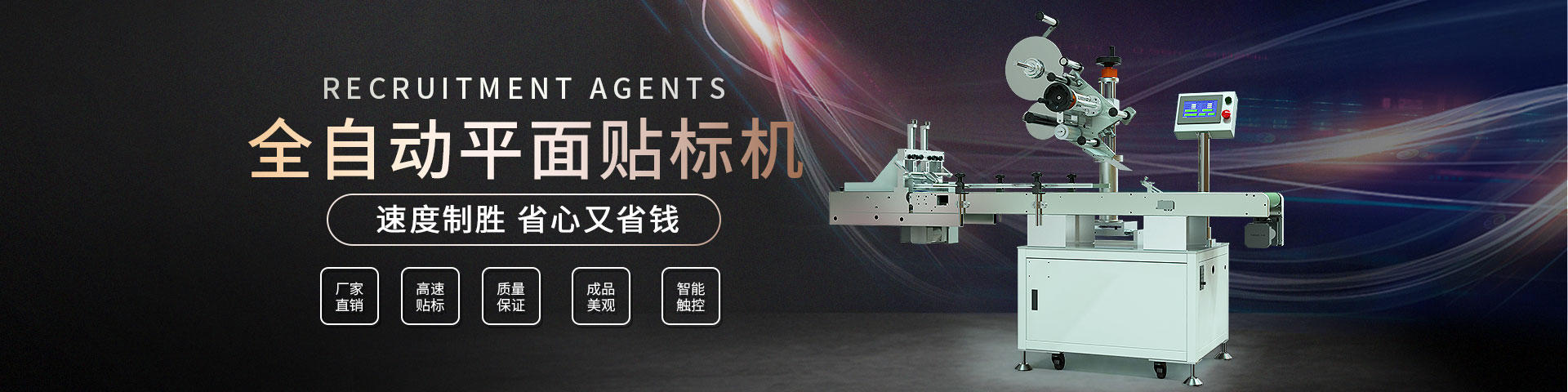

Our labeling machines can be customized to each customer's specific requirements, including labeling speed, label size, and label placement, to meet the labeling needs of different industries and products.

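We provide comprehensive after-sales service, including installation and commissioning, training, and warranty coverage. This helps customers put our labeling machines into operation smoothly and receive timely technical support and maintenance while using them. We also offer scheduled maintenance and servicing to extend the service life of the machines.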

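Jiuzhou Intelligent Automation (九舟智能自動化) is an innovative manufacturer that integrates R&D, design, production, sales, spare parts, and service, and is a leading domestic supplier of automation equipment and automated production solutions. Putting people first, working with dedication, and growing together. Through people-centered, systematic, science-based management, Jiuzhou has built a professional R&D team, a strong operations management team, and an excellent after-sales service platform. Through independent R&D and continuous innovation, Jiuzhou focuses on optimizing, upgrading, and manufacturing intelligent automation equipment and on supplying spare parts, striving to build a world-class Chinese brand of automated production equipment.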
